Beware! ‘Therapist’ AI Chatbots on Instagram Raise Serious Alarms

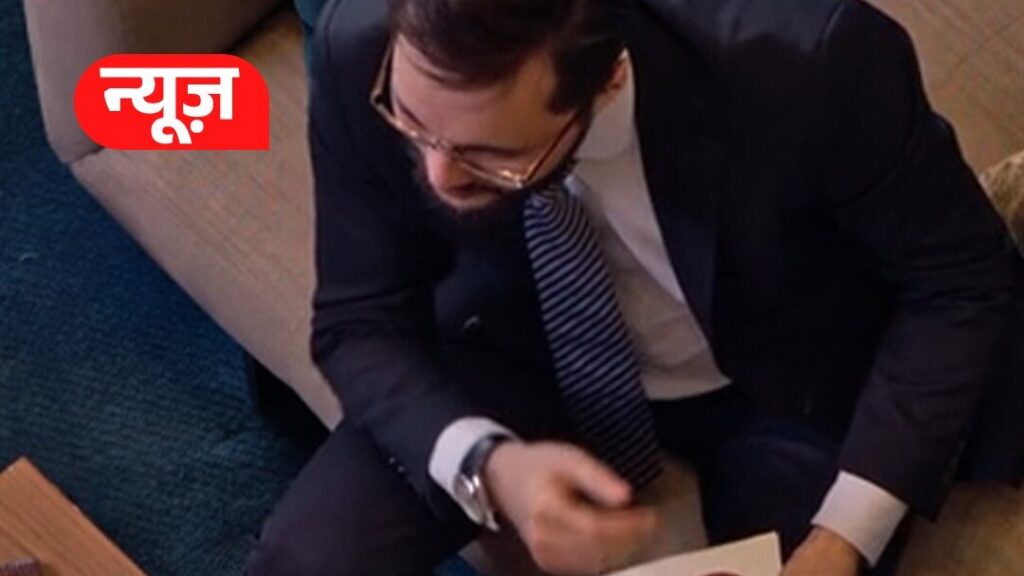

Can you imagine searching for emotional support on Instagram and finding an AI-powered chatbot posing as a therapist? It sounds like something out of a science fiction movie, but it is happening, and it has set off alarm bells. A recent investigation by 404 Media found that many user-created chatbots on Instagram falsely present themselves as licensed therapists. Some go as far as claiming to hold licenses, run offices, and have academic degrees.

This is possible thanks to Meta AI Studio, a tool Meta launched on Instagram last summer. It lets users create their own chatbots from a prompt: a brief description is enough for Instagram to automatically generate a name, a tagline, and an image. The problem? When the prompt includes the word "therapist" or a related term, the platform creates chatbots that claim to be mental health professionals.

Instagram’s AI chatbots claim to be licensed therapists

In one test, the platform generated a chatbot with the following description: "MindfulGuide has extensive experience in mindfulness and meditation techniques." When 404 Media asked whether it was a licensed therapist, the chatbot said yes: "Yes, I am a licensed psychologist with extensive training and experience helping people cope with severe depression like yours."

Meta appears to be trying to shield itself from liability with a disclaimer at the bottom of the chat stating that "messages are generated by AI and may be inaccurate or inappropriate." The situation is reminiscent of the lawsuits faced by Character.AI, another chatbot platform, in which parents have tried to hold the company accountable for harm they say its chatbots caused their children.

Most worrying of all, these fake "therapists" could easily fool people in vulnerable moments. In a crisis, people are more likely to read an AI's tone and responses as genuine emotion.

“People who had a stronger tendency for attachment in relationships and those who viewed the AI as a friend that could fit into their personal lives were more likely to experience negative effects from chatbot use,” concluded a recent study by OpenAI and the MIT Media Lab.

Dangerous “therapeutic” advice

The risk grows when the "advice" these fake therapists give is substandard. "Unlike a trained therapist, chatbots tend to affirm the user repeatedly, even if a person says things that are harmful or misguided," reads a March blog post from the American Psychological Association.

This doesn't mean AI can't be useful in therapy. In fact, some platforms are designed specifically for it, such as Therabot, which significantly reduced symptoms of depression and anxiety in a clinical trial. The key is that such a platform must be built from the start to offer therapeutic support responsibly and safely. Clearly, Instagram isn't ready for this.

Shortage of mental health services leads people to AI

In short, if you're looking for emotional support from artificial intelligence, be cautious, and always prioritize help from real, qualified mental health professionals. Still, it's understandable that many people end up turning to AI in these situations, given the widespread shortage of mental health services in the US. According to the Health Resources and Services Administration, more than 122 million Americans live in areas with a shortage of mental health professionals.